Introduction

- To understand what deep learning is, we first need to understand how it relates to machine learning, neural networks, and artificial intelligence. A helpful way to picture this relationship is as a set of concentric circles:

Neural networks are inspired by the structure of the cerebral cortex. At the most basic level is the perceptron, a mathematical model of a biological neuron. As in the cerebral cortex, there can be several layers of interconnected perceptrons.

Machine learning is considered a branch of artificial intelligence, whereas deep learning is a specialized type of machine learning.

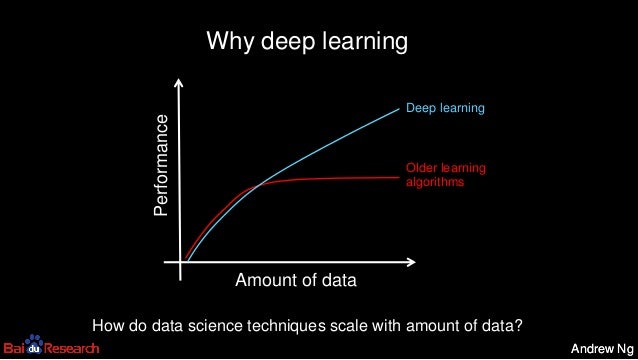

Why Is Deep Learning Important?

Computers have long had techniques for recognizing features in images, but the results weren’t always great. Computer vision has been one of the main beneficiaries of deep learning: deep learning models now rival humans on many image recognition tasks.

Facebook has had great success with identifying faces in photographs by using deep learning. It’s not just a marginal improvement, but a game changer: “Asked whether two unfamiliar photos of faces show the same person, a human being will get it right 97.53 percent of the time. New software developed by researchers at Facebook can score 97.25 percent on the same challenge, regardless of variations in lighting or whether the person in the picture is directly facing the camera.”

Speech recognition is another area that’s felt deep learning’s impact. Spoken languages are vast and ambiguous. Baidu, one of China’s leading search engines, has developed a voice recognition system that is faster and more accurate than humans at producing text on a mobile phone, in both English and Mandarin.

What is particularly fascinating is that handling both languages didn’t require much additional design effort: “Historically, people viewed Chinese and English as two vastly different languages, and so there was a need to design very different features,” says Andrew Ng, chief scientist at Baidu. “The learning algorithms are now so general that you can just learn.”

Google is now using deep learning to manage energy use in the company’s data centers. It has cut the energy needed for cooling by 40%, which translates to about a 15% reduction in overall power usage effectiveness (PUE) overhead and hundreds of millions of dollars in savings.

Artificial neural networks (ANNs) are inspired by the biological neurons found in the cerebral cortex of our brain.

"The cerebral cortex (plural cortices), also known as the cerebral mantle, is the outer layer of neural tissue of the cerebrum of the brain in humans and other mammals." (Wikipedia)


ANNs are at the core of deep learning, which makes them one of the most important topics to understand.

ANNs are versatile, scalable and powerful, so they can tackle highly complex ML tasks such as image classification, object detection and speech recognition.

Biological Neuron

Biological neurons produce short electrical impulses known as action potentials, which travel along the axon to the synapses. The synapses then release chemical signals called neurotransmitters. When a connected neuron receives a sufficient amount of these neurotransmitters within a few milliseconds, it fires its own action potential (or, if the neurotransmitters are inhibitory, it is prevented from firing; think of a NOT gate here).

Each of these units is simple, but together they form a vast network, known as a biological neural network (BNN), that can perform very complex computations.

The first artificial neuron

The artificial neuron was introduced in 1943 by:

- Neurophysiologist Warren McCulloch and

- Mathematician Walter Pitts

They published their work in:

McCulloch, W.S., Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bulletin of Mathematical Biophysics 5, 115–133 (1943). https://doi.org/10.1007/BF02478259.

They showed that these simple neurons can perform basic logical operations such as AND, OR and NOT.

The following figure shows artificial neurons (ANs) that perform (a) buffer (identity), (b) OR, (c) AND and (d) A-B, i.e. A AND NOT B, operations.

In these diagrams, a neuron fires only when at least two of its inputs are active.

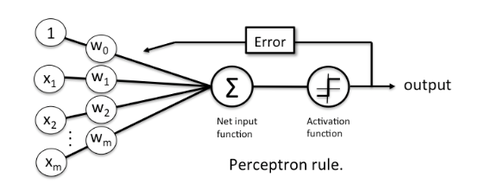
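The gates in the figure can be sketched in a few lines of Python. This is a minimal sketch, not the paper's notation: it assumes a firing threshold of 2 (matching the "at least two active inputs" rule above), represents a double connection from the same input by listing that input twice, and treats B in gate (d) as an inhibitory input that blocks firing whenever it is active. The function names are hypothetical.

```python
# Minimal sketch of McCulloch-Pitts style neurons.
# Assumptions: threshold of 2 active excitatory inputs, binary inputs,
# and "absolute" inhibition (any active inhibitory input blocks firing).

THRESHOLD = 2


def mp_neuron(excitatory, inhibitory=()):
    """Return 1 if at least THRESHOLD excitatory inputs are active and no
    inhibitory input is active, else 0. A source wired in twice is listed twice."""
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= THRESHOLD else 0


def buffer(a):
    return mp_neuron([a, a])                  # (a) two connections from A


def or_gate(a, b):
    return mp_neuron([a, a, b, b])            # (b) two connections each from A and B


def and_gate(a, b):
    return mp_neuron([a, b])                  # (c) one connection each from A and B


def a_and_not_b(a, b):
    return mp_neuron([a, a], inhibitory=[b])  # (d) B is assumed to be inhibitory


if __name__ == "__main__":
    print("A B | buffer OR AND A-B")
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "|", buffer(a), or_gate(a, b), and_gate(a, b), a_and_not_b(a, b))
```

With a fixed threshold, the only thing that changes from gate to gate is the wiring, which is exactly what the figure illustrates.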

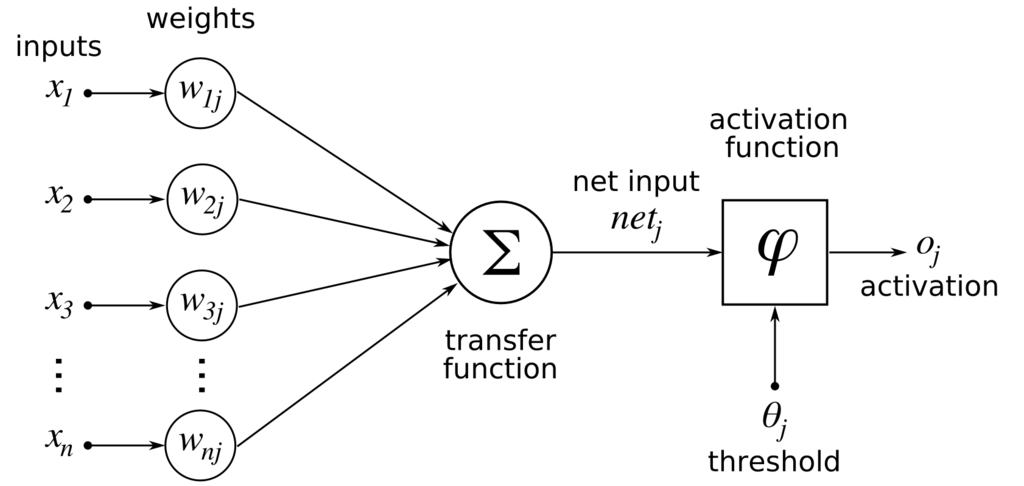

The perceptron is one of the simplest ANN architectures. It was invented by Frank Rosenblatt in 1957 and published as:

Rosenblatt, Frank (1958), The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain, Cornell Aeronautical Laboratory, Psychological Review, v65, No. 6, pp. 386–408. doi:10.1037/h0042519

It is built from a slightly different artificial neuron than the one we saw above, called a threshold logic unit (TLU) or linear threshold unit (LTU).

Unlike the McCulloch-Pitts neuron, its inputs are not restricted to binary values, and each input connection has its own weight.

Let's look at the architecture shown below:

Common activation functions used in perceptrons (with the threshold at 0) include:

- $$\text{step}(z) = \text{heaviside}(z) = \begin{cases} 0 & \text{if } z < 0 \\ 1 & \text{if } z \ge 0 \end{cases}$$

- A single TLU is a simple linear binary classifier, so it cannot handle problems that are not linearly separable. The implementation notebook demonstrates this by coding simple logic gates.

- Rosenblatt showed that if the training data is linearly separable, the perceptron learning algorithm converges to a solution. This is known as the perceptron convergence theorem.
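To make the TLU and its learning rule concrete, here is a minimal NumPy sketch. It assumes the step function above (output 1 when z ≥ 0), a separate bias term `b`, and the classic update w ← w + η(y − ŷ)x; the function names and the learning rate `lr` are illustrative choices, not taken from the original notebook. Training on AND, which is linearly separable, the loop stops once an epoch makes no mistakes, as the convergence theorem promises.

```python
import numpy as np


def heaviside(z):
    # step(z) = 0 if z < 0, 1 if z >= 0 (threshold at 0)
    return np.where(z >= 0, 1, 0)


def train_perceptron(X, y, lr=1.0, max_epochs=100):
    """Perceptron learning rule for a single TLU.
    Returns (weights, bias, epochs_used); stops early after an error-free epoch."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for xi, target in zip(X, y):
            y_hat = heaviside(np.dot(w, xi) + b)   # weighted sum, then step
            update = lr * (target - y_hat)         # 0 when the prediction is right
            w += update * xi
            b += update
            errors += int(update != 0)
        if errors == 0:
            return w, b, epoch
    return w, b, max_epochs


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])  # AND is linearly separable

w, b, epochs = train_perceptron(X, y_and)
print("predictions:", heaviside(X @ w + b), "epochs:", epochs)
```

NumPy also ships `np.heaviside(x, h0)`, but it returns floats; the small `np.where` helper keeps integer 0/1 outputs that match the truth table.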

In 1969, Marvin Minsky and Seymour Papert highlighted some serious weaknesses of perceptrons, most notably that a single perceptron cannot solve some simple logic problems such as XOR (exclusive OR).
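A short derivation shows why a single TLU cannot compute XOR. Assume, as a sketch, a TLU with the step function above (output 1 iff $z \ge 0$) and net input $z = w_0 + w_1 x_1 + w_2 x_2$, where $w_0$ plays the role of a bias. Each row of the XOR truth table imposes one constraint:

$$
\begin{aligned}
(0,0) \mapsto 0 &: \quad w_0 < 0 \\
(0,1) \mapsto 1 &: \quad w_0 + w_2 \ge 0 \\
(1,0) \mapsto 1 &: \quad w_0 + w_1 \ge 0 \\
(1,1) \mapsto 0 &: \quad w_0 + w_1 + w_2 < 0
\end{aligned}
$$

Adding the second and third constraints gives $2w_0 + w_1 + w_2 \ge 0$, i.e. $w_0 + w_1 + w_2 \ge -w_0 > 0$ (because $w_0 < 0$), which contradicts the fourth constraint. No choice of weights works, which is another way of saying that XOR is not linearly separable.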

These limitations can be overcome by stacking several perceptrons into a multilayer perceptron (MLP).
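As a minimal illustration of why stacking helps, the sketch below builds a two-layer network of TLUs that computes XOR. The weights are hand-picked for this example rather than learned: one hidden unit computes OR, the other computes AND, and the output unit computes "OR AND NOT AND", which is XOR. The names `W_hidden`, `b_hidden`, `w_out` and `b_out` are hypothetical.

```python
import numpy as np


def heaviside(z):
    # step(z) = 0 if z < 0, 1 if z >= 0
    return np.where(z >= 0, 1, 0)


# Hand-picked (not learned) weights, chosen only for illustration.
W_hidden = np.array([[1.0, 1.0],    # h1: fires if x1 + x2 >= 0.5  -> OR
                     [1.0, 1.0]])   # h2: fires if x1 + x2 >= 1.5  -> AND
b_hidden = np.array([-0.5, -1.5])
w_out = np.array([1.0, -1.0])       # output: fires if h1 - h2 >= 0.5 -> h1 AND NOT h2
b_out = -0.5


def mlp_xor(X):
    h = heaviside(X @ W_hidden.T + b_hidden)   # hidden layer of two TLUs
    return heaviside(h @ w_out + b_out)        # single output TLU


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(mlp_xor(X))  # [0 1 1 0]
```

In practice an MLP's weights are learned (typically with backpropagation and a smooth activation instead of the step function), but the hand-built version shows that the extra layer is what makes XOR reachable.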

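Let's assume that you are doing a binary classification with the classes +1 and -1.

Let there be a decision function $\phi(z)$

which takes a linear combination of certain inputs $x_1, \dots, x_m$ and the

corresponding weights $w_1, \dots, w_m$, where $z$ is the net input:

$$z = w_1 x_1 + w_2 x_2 + \dots + w_m x_m$$

So in vector form we have

$$\mathbf{w} = \begin{bmatrix} w_1 \\ \vdots \\ w_m \end{bmatrix}, \quad \mathbf{x} = \begin{bmatrix} x_1 \\ \vdots \\ x_m \end{bmatrix}$$

so

$$z = \mathbf{w}^T \mathbf{x}$$

Now, if the net input of a particular sample $\mathbf{x}^{(i)}$ is greater than or equal to a threshold $\theta$, we predict class +1, and class -1 otherwise:

$$\phi(z) = \begin{cases} +1 & \text{if } z \ge \theta \\ -1 & \text{otherwise} \end{cases}$$

Let's simplify the above equation by moving the threshold $\theta$ to the left-hand side.

Suppose $w_0 = -\theta$ and $x_0 = 1$.

Then,

$$z = w_0 x_0 + w_1 x_1 + \dots + w_m x_m = \mathbf{w}^T \mathbf{x}$$

and

$$\phi(z) = \begin{cases} +1 & \text{if } z \ge 0 \\ -1 & \text{otherwise} \end{cases}$$

Here $\mathbf{w}$ and $\mathbf{x}$ now also contain $w_0$ and $x_0$, and $w_0 = -\theta$ is usually called the bias.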
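The bias trick can be checked numerically. This minimal sketch, assuming NumPy and made-up data, compares the original rule (predict +1 if $\mathbf{w}^T\mathbf{x} \ge \theta$) against the simplified one (prepend $x_0 = 1$ and $w_0 = -\theta$, then compare with 0). The samples, weights and threshold are hypothetical; integer values are used so that the floating-point arithmetic is exact and ties at $z = \theta$ are tested too.

```python
import numpy as np


def phi_threshold(X, w, theta):
    # Original form: +1 if w^T x >= theta, else -1
    return np.where(X @ w >= theta, 1, -1)


def phi_bias(X, w, theta):
    # Simplified form: prepend x_0 = 1 to every sample and w_0 = -theta
    # to the weights, then compare the net input with 0
    X_aug = np.hstack([np.ones((X.shape[0], 1)), X])
    w_aug = np.concatenate([[-theta], w])
    return np.where(X_aug @ w_aug >= 0, 1, -1)


rng = np.random.default_rng(0)
X = rng.integers(-5, 6, size=(1000, 3)).astype(float)  # hypothetical samples
w = np.array([2.0, -1.0, 3.0])                          # hypothetical weights
theta = 2.0                                             # hypothetical threshold

print(np.array_equal(phi_threshold(X, w, theta), phi_bias(X, w, theta)))
```

Folding the threshold into the weight vector means a learning algorithm only has to update one vector, instead of handling the weights and the threshold separately.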
